
AWS S3 Storage – Access S3 Storage On-Premises

To access S3 storage on-premises, you need to configure AWS Storage Gateway. There are two ways to do so:

Gateway-Cached volumes: Maintain local, low-latency access to your most recently accessed data while storing all your data in Amazon S3.

You will need a host in your datacenter to deploy the gateway virtual machine (VM). Pick a host that meets AWS's published minimum requirements for the gateway VM.

The gateway VM supports two virtualization platforms: Microsoft Hyper-V and VMware.

Download the virtual machine that AWS provides specifically for this purpose, then import it into Hyper-V or VMware ESX.

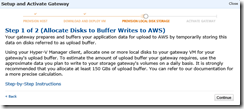

Your gateway prepares and buffers your application data for upload to AWS by temporarily storing this data on disks referred to as the upload buffer.

Using your Hyper-V Manager client, allocate one or more local disks to your gateway VM for your gateway's upload buffer. To estimate how much upload buffer your gateway requires, use the approximate amount of data you plan to write to your storage gateway's volumes each day. It is strongly recommended that you allocate at least 150 GB of upload buffer.
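The sizing guidance above can be restated as simple arithmetic: take your estimated daily write volume, but never go below the recommended 150 GB floor. This is only a rule-of-thumb sketch of that guidance, not an AWS formula, and the function name Get-UploadBufferSizeGB is hypothetical.

```powershell
# Hypothetical helper: restates the upload buffer sizing guidance as arithmetic.
# Assumption: size the buffer from the daily write estimate, with a 150 GB floor.
function Get-UploadBufferSizeGB {
    param(
        # Approximate data (GB) you expect to write to the gateway's volumes per day
        [Parameter(Mandatory)]
        [ValidateScript({ $_ -ge 0 })]
        [double]$DailyWriteGB,

        # Recommended minimum upload buffer from the guidance above
        [double]$MinimumGB = 150
    )
    # Use whichever is larger: the daily write estimate or the recommended floor
    [math]::Max($DailyWriteGB, $MinimumGB)
}

Get-UploadBufferSizeGB -DailyWriteGB 80    # 150 (the floor applies)
Get-UploadBufferSizeGB -DailyWriteGB 400   # 400
```

The result is the size to give the disk(s) you allocate for the upload buffer in Hyper-V Manager.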

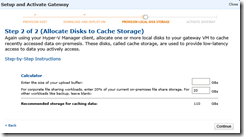

Again using your Hyper-V Manager client, allocate one or more local disks to your gateway VM to cache recently accessed data on-premises. These disks, called cache storage, provide low-latency access to the data you actively use.

Gateway-Stored Volumes: Schedule off-site backups to Amazon S3 for your on-premises data.

AWS S3 Storage – PowerShell – Part-1

To manage AWS services and resources, AWS provides the AWS Tools for Windows PowerShell, which let you manage your AWS services and resources from PowerShell.

In this post we will go over how to connect to AWS S3 storage and create, manage, and delete buckets using Windows PowerShell.

Before we start, a little background: what is AWS S3?

The three S's stand for Simple Storage Service. In short, S3 provides businesses, developers, and technology enthusiasts with secure, durable, highly scalable object storage.

S3 is pretty easy to use. If you just want to upload and download data, you don't need to know any programming language; you can do it with a few simple clicks.

If you want your clients to be able to view or download your stored data, you can do that in a few clicks as well (choose the folder and click Actions > Make Public).

With Amazon S3, you pay only for the storage you actually use. There is no minimum fee and no setup cost.

If you have not downloaded the AWS Tools for Windows PowerShell yet, follow the post to do so.

To start, you can use either the AWS-provided PowerShell console or the standard Windows PowerShell console.

The AWS console is preconfigured to use the AWSPowerShell module; in the standard Windows PowerShell console, import the AWS module yourself:

Import-Module "C:\Program Files (x86)\AWS Tools\PowerShell\AWSPowerShell\AWSPowerShell.psd1"

Then run Initialize-AWSDefaults to set up your default AWS credentials and region.

Let's start.

- Open PowerShell and import the AWS PowerShell module.

- Once you have created an AWS profile, Initialize-AWSDefaults will choose that profile by default.

Now check how many buckets are associated with your account:

Get-S3Bucket | select BucketName,CreationDate

If there are no S3 buckets, let's create one.
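Bucket names must be globally unique, so New-S3Bucket fails if a name is already taken, and they must also follow the S3 naming rules. A minimal local pre-check covering a subset of those rules (3 to 63 characters; lowercase letters, digits, hyphens and periods; starting and ending with a letter or digit; no adjacent periods; not formatted like an IP address) might look like the sketch below. Test-S3BucketNameFormat is a hypothetical helper, not an AWS cmdlet, and it cannot tell you whether a name is already in use.

```powershell
# Hypothetical helper (not part of the AWS module): checks a subset of the
# S3 bucket naming rules before calling New-S3Bucket.
function Test-S3BucketNameFormat {
    param([Parameter(Mandatory)][string]$BucketName)

    # 3-63 chars; lowercase letters, digits, '.' and '-'; starts/ends with a letter or digit.
    # -cnotmatch is used because PowerShell's -match operators are case-insensitive by default.
    if ($BucketName -cnotmatch '^[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]$') { return $false }

    # No two adjacent periods
    if ($BucketName.Contains('..')) { return $false }

    # Must not look like an IPv4 address, e.g. 192.168.5.4
    if ($BucketName -match '^\d{1,3}(\.\d{1,3}){3}$') { return $false }

    return $true
}

Test-S3BucketNameFormat -BucketName 'dbproximagebucket'   # True
Test-S3BucketNameFormat -BucketName 'DBProxImageBucket'   # False (uppercase)
```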

- New-S3Bucket -BucketName dbproximagebucket

- To create a folder (directory) in a bucket: there is no dedicated PowerShell cmdlet for this at present, because an S3 "folder" is really just a key prefix. Instead, create a folder on your local computer containing a text file and upload it to the bucket. While uploading, pass the -KeyPrefix parameter, which becomes the folder name under the bucket; -Recurse uploads all subfolders and files.

Write-S3Object -BucketName dbproximagebucket -KeyPrefix Imagesdbprox -Folder "C:\Upload _To_S3Bucket" -Recurse

- List what we have in the S3 bucket:

Get-S3Object -BucketName dbproximagebucket | select Key,Size

As you can see from the output, I have just one folder with a text file. Let's upload some data within folders and subfolders.

-BucketName: the main bucket into which all the data is uploaded.

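The Key column in the output holds the full path of each file within the bucket. For example, in ProjectFiles/Top10 Hotels/Capella_Hotels.jpg, ProjectFiles is the root folder inside the bucket, Top10 Hotels is a subfolder inside the root folder, and Capella_Hotels.jpg is the file inside that subfolder.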
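Because S3 has no real directories, the "folder" part of a key such as ProjectFiles/Top10 Hotels/Capella_Hotels.jpg is simply the text before the last /. The small sketch below splits a key that way; Split-S3Key is a hypothetical helper, not an AWS cmdlet.

```powershell
# Hypothetical helper: splits an S3 key into its "folder" part and file name.
# S3 stores flat keys; '/' is only a naming convention that tools display as folders.
function Split-S3Key {
    param([Parameter(Mandatory)][string]$Key)

    $lastSlash = $Key.LastIndexOf('/')
    if ($lastSlash -lt 0) {
        # Object sits at the top level of the bucket
        $folder = ''
        $name   = $Key
    } else {
        $folder = $Key.Substring(0, $lastSlash)
        $name   = $Key.Substring($lastSlash + 1)
    }

    # Number of folder levels, e.g. 2 for "ProjectFiles/Top10 Hotels"
    $depth = 0
    if ($folder) { $depth = $folder.Split('/').Length }

    [pscustomobject]@{
        Folder = $folder
        Name   = $name
        Depth  = $depth
    }
}

Split-S3Key -Key 'ProjectFiles/Top10 Hotels/Capella_Hotels.jpg'
```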

- Let's upload folders, subfolders, and files inside a root folder. The folder Imagesdbprox already existed; we moved a couple of folders, subfolders, and files into it.

Upload files to a bucket

Write-S3Object -BucketName dbproximagebucket -KeyPrefix ProjectFiles -Folder "H:\SingaporeProject\Images\GoSingaporePics\Hotels" -Recurse

Upload files to a folder in a bucket

Write-S3Object -BucketName dbproximagebucket -KeyPrefix Imagesdbprox -Folder "H:\SingaporeProject\Images\GoSingorePics\Hotels" -Recurse
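Before running Write-S3Object with -Folder, -KeyPrefix and -Recurse, it can help to preview which keys the upload should produce. The sketch below assumes the mapping described earlier in this post (key = KeyPrefix + "/" + the file's path relative to the uploaded folder, with \ turned into /). Get-S3UploadPlan is a hypothetical helper: it only reads the local disk and uploads nothing.

```powershell
# Hypothetical helper: previews the keys a recursive folder upload should create.
# Assumption: key = KeyPrefix + '/' + path relative to the folder, using '/' separators.
function Get-S3UploadPlan {
    param(
        [Parameter(Mandatory)][string]$Folder,
        [Parameter(Mandatory)][string]$KeyPrefix
    )
    # Resolve to a full path so relative paths are computed consistently
    $root   = (Resolve-Path -LiteralPath $Folder).ProviderPath.TrimEnd('\', '/')
    $prefix = $KeyPrefix.Trim('/')

    Get-ChildItem -LiteralPath $root -Recurse -File | ForEach-Object {
        # Path below the uploaded folder, e.g. "Top10 Hotels\Capella_Hotels.jpg"
        $relative = $_.FullName.Substring($root.Length).TrimStart('\', '/')
        [pscustomobject]@{
            Key  = "$prefix/" + ($relative -replace '\\', '/')
            Size = $_.Length
        }
    }
}

# Usage:
# Get-S3UploadPlan -Folder "H:\SingaporeProject\Images\GoSingaporePics\Hotels" -KeyPrefix ProjectFiles
```

Comparing this preview with the Get-S3Object listing after the upload is a quick way to confirm nothing was missed.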

List all objects in the bucket, first with every property and then only Key and Size:

Get-S3Object -BucketName dbproximagebucket

Get-S3Object -BucketName dbproximagebucket | select Key,Size
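Since Get-S3Object returns one object per key, with the Key and Size properties used in the commands above, its output can be summarised per top-level folder with standard pipeline cmdlets. Get-S3FolderSummary is a hypothetical helper; it only processes whatever objects are piped into it, so it can be tried on mock data without an AWS account.

```powershell
# Hypothetical helper: groups S3 listing results by top-level "folder".
# Works on any piped objects that have Key and Size properties.
function Get-S3FolderSummary {
    [CmdletBinding()]
    param(
        [Parameter(Mandatory, ValueFromPipeline)]
        [object]$InputObject
    )
    begin   { $items = New-Object System.Collections.Generic.List[object] }
    process { $items.Add($InputObject) }
    end {
        $items |
            # Keys without '/' live at the top level of the bucket
            Group-Object -Property { if ($_.Key -match '/') { $_.Key.Split('/')[0] } else { '(root)' } } |
            ForEach-Object {
                [pscustomobject]@{
                    Folder    = $_.Name
                    Objects   = $_.Count
                    TotalSize = ($_.Group | Measure-Object -Property Size -Sum).Sum
                }
            } |
            Sort-Object Folder
    }
}

# Usage:
# Get-S3Object -BucketName dbproximagebucket | Get-S3FolderSummary
```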

List the folders and files within a root folder

Get-S3Object -BucketName dbproximagebucket -KeyPrefix Imagesdbprox | select Key,Size

List the files in a folder/subfolder

Get-S3Object -BucketName dbproximagebucket -KeyPrefix "Imagesdbprox/Top10 Hotels" | select Key,Size
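-KeyPrefix is a plain, case-sensitive string match on the start of each key, not a folder lookup. A prefix of "Imagesdbprox/Top10 Hotels" would therefore also match keys under a sibling folder such as "Imagesdbprox/Top10 Hotels Archive" (a hypothetical name used only for illustration); ending the prefix with / limits the result to that one folder. Select-S3KeyInFolder is a hypothetical helper that applies the same rule locally to a list of keys so the difference is easy to see.

```powershell
# Hypothetical helper: mimics S3 prefix matching for a single "folder".
# The trailing '/' is what stops "Top10 Hotels" from also matching "Top10 Hotels Archive".
function Select-S3KeyInFolder {
    param(
        [Parameter(Mandatory)][string[]]$Key,
        [Parameter(Mandatory)][string]$Folder
    )
    $prefix = $Folder.TrimEnd('/') + '/'
    # Ordinal comparison, because S3 key names are case-sensitive
    $Key | Where-Object { $_.StartsWith($prefix, [StringComparison]::Ordinal) }
}

$keys = @(
    'Imagesdbprox/Top10 Hotels/Capella_Hotels.jpg'
    'Imagesdbprox/Top10 Hotels Archive/old.jpg'   # hypothetical sibling folder
    'Imagesdbprox/readme.txt'
)
Select-S3KeyInFolder -Key $keys -Folder 'Imagesdbprox/Top10 Hotels'

# Equivalent server-side listing (note the trailing slash):
# Get-S3Object -BucketName dbproximagebucket -KeyPrefix "Imagesdbprox/Top10 Hotels/" | select Key,Size
```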

Delete a bucket (empty or with content)

If the bucket is not empty, the following command returns an error:

Remove-S3Bucket -BucketName dbproximagebucket

To delete the bucket together with its content, add the -DeleteBucketContent switch:

Remove-S3Bucket -BucketName dbproximagebucket -DeleteBucketContent
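Because -DeleteBucketContent removes every object in the bucket, you may prefer a wrapper that refuses to delete a non-empty bucket unless you ask for it explicitly. The sketch below uses only the Get-S3Object and Remove-S3Bucket commands shown in this post; the function name Remove-S3BucketSafely and its -IncludeContent switch are hypothetical.

```powershell
# Hypothetical wrapper: only deletes a non-empty bucket when -IncludeContent is given.
function Remove-S3BucketSafely {
    [CmdletBinding()]
    param(
        [Parameter(Mandatory)][string]$BucketName,
        # Opt-in switch: also delete every object stored in the bucket
        [switch]$IncludeContent
    )
    # Only the first listed object is needed to know whether the bucket is empty
    $firstObject = Get-S3Object -BucketName $BucketName | Select-Object -First 1

    if ($firstObject -and -not $IncludeContent) {
        Write-Warning "Bucket '$BucketName' is not empty; re-run with -IncludeContent to delete it and its objects."
        return $false
    }

    if ($IncludeContent) {
        Remove-S3Bucket -BucketName $BucketName -DeleteBucketContent | Out-Null
    } else {
        Remove-S3Bucket -BucketName $BucketName | Out-Null
    }
    return $true
}

# Usage:
# Remove-S3BucketSafely -BucketName dbproximagebucket                   # warns if not empty
# Remove-S3BucketSafely -BucketName dbproximagebucket -IncludeContent   # deletes bucket and objects
```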